Defining Package ABI Compatibility

Each package recipe can generate N binary packages, depending on three items: settings, options and requires.

When any of the settings of a package recipe changes, it will reference a different binary:

from conans import ConanFile

class MyLibConanPackage(ConanFile):
    name = "mylib"
    version = "1.0"
    settings = "os", "arch", "compiler", "build_type"

When this package is installed from a conanfile.txt, from another package's conanfile.py, or directly:

$ conan install mylib/1.0@user/channel -s arch=x86_64 -s ...

The process is:

Conan gets the user input settings and options. Those settings and options can come from the command line, from profiles, or from the values cached in the latest conan install execution.

Conan retrieves the mylib/1.0@user/channel recipe, reads the settings attribute, and assigns the necessary values.

With the current package values for settings (also options and requires), it will compute a SHA1 hash that will serve as the binary package ID, e.g., c6d75a933080ca17eb7f076813e7fb21aaa740f2.

Conan will try to find the c6d75... binary package. If it exists, it will be retrieved. If it cannot be found, it will fail and indicate that it can be built from sources using conan install --build.

If the package is installed again using different settings, for example, on a 32-bit architecture:

$ conan install mylib/1.0@user/channel -s arch=x86 -s ...

The process will be repeated with a different generated package ID, because the arch setting will have a different value. The same applies to different compilers, compiler versions and build types. When generating multiple binaries, a separate ID is generated for each configuration.

When developers using the package use the same settings as one of those uploaded binaries, the computed package ID will be identical, causing the binary to be retrieved and reused without the need to rebuild it from sources.
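To make the idea concrete, here is a minimal Python sketch of how declared settings, options and requires could be turned into a SHA1 package ID. The helper compute_package_id() and its text serialization are hypothetical. Conan's real serialization of the info object differs, but the property shown is the same: identical inputs give identical IDs, and changing any declared value gives a new one.

```python
import hashlib


def compute_package_id(declared_settings, profile, options=None, requires=None):
    """Hash the declared settings, options and requires into a SHA1 hex digest.

    Hypothetical, simplified model: only the settings the recipe declares are
    read from the profile, so any other profile value cannot change the ID.
    """
    options = options or {}
    requires = requires or []
    lines = ["[settings]"]
    lines += [f"{name}={profile[name]}" for name in sorted(declared_settings)]
    lines.append("[options]")
    lines += [f"{name}={value}" for name, value in sorted(options.items())]
    lines.append("[requires]")
    lines += sorted(requires)
    return hashlib.sha1("\n".join(lines).encode("utf-8")).hexdigest()


declared = ("os", "arch", "compiler", "build_type")
profile_64 = {"os": "Linux", "arch": "x86_64", "compiler": "gcc", "build_type": "Release"}
profile_32 = dict(profile_64, arch="x86")

id_64 = compute_package_id(declared, profile_64)
id_32 = compute_package_id(declared, profile_32)
print(id_64 != id_32)  # True: a different arch selects a different binary
print(id_64 == compute_package_id(declared, dict(profile_64)))  # True: same settings, same binary
```

Because undeclared settings are never read in this model, a recipe that declares none of them gets a single ID for every profile.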

The options behavior is very similar. The main difference is that options can be more easily defined at the package level and they can be defaulted. Check the options reference.

Consider the simple scenario of a header-only library. The package does not need to be built, and it will not have any ABI issues at all.

The recipe for such a package should generate a single binary package, no more. This is easily achieved by declaring neither settings nor options in the recipe, as follows:

from conans import ConanFile

class MyLibConanPackage(ConanFile):
    name = "mylib"
    version = "1.0"
    # no settings defined!

No matter which settings are defined by the users, including the compiler or its version, the package settings and options will always be the same (left empty), so they will hash to the same binary package ID. That package will typically contain just the header files.

What happens if we have a library that can be built with GCC 4.8 and will preserve ABI compatibility with GCC 4.9? (This kind of compatibility is easier to achieve for pure C libraries, for example.)

Although it could be argued that it is worth rebuilding with 4.9 too, to get fixes and performance improvements, let’s suppose that we don’t want to create 2 different binaries, but just a single one built with GCC 4.8 that also needs to be compatible with GCC 4.9 installations.

Defining a Custom package_id()

The default package_id() uses the settings and options directly as defined, and assumes semantic versioning for the dependencies declared in requires.

This package_id() method can be overridden to control the package ID generation. Within package_id(), we have access to the self.info object, which is hashed to compute the binary ID and contains:

self.info.settings: Contains all the declared settings, always as string values. We can access/modify the settings, e.g., self.info.settings.compiler.version.

self.info.options: Contains all the declared options, always as string values too, e.g., self.info.options.shared.

Initially this info object contains the original settings and options, but they can be changed without constraints to any other string value.

For example, if you are sure your package ABI compatibility is fine for GCC versions >= 4.5 and < 5.0, you could do the following:

from conans import ConanFile
from conans.model.version import Version

class PkgConan(ConanFile):
    name = "pkg"
    version = "1.0"
    settings = "compiler", "build_type"

    def package_id(self):
        v = Version(str(self.settings.compiler.version))
        if self.settings.compiler == "gcc" and (v >= "4.5" and v < "5.0"):
            # Any string works, as long as it is the same for the whole range
            self.info.settings.compiler.version = "GCC version between 4.5 and 5.0"

We have set self.info.settings.compiler.version to an arbitrary string, whose value is not important (it could be any string). The only important thing is that it is the same for any GCC version between 4.5 and 5.0. For all those versions, the compiler version will always be hashed to the same ID.
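The range check in the recipe above can be tried outside Conan with this small sketch. It assumes plain numeric versions such as "4.9" and uses integer tuples instead of conans.model.version.Version. This way "4.10" is not mistaken for a version lower than "4.5", as it would be with plain string comparison. The function name erased_compiler_version() is hypothetical.

```python
def parse_version(text):
    """Turn a numeric version such as "4.9" or "4.10" into a comparable tuple."""
    return tuple(int(part) for part in text.split("."))


def erased_compiler_version(compiler, version):
    """Value hashed in place of compiler.version, mirroring the package_id() above."""
    if compiler == "gcc" and (4, 5) <= parse_version(version) < (5, 0):
        # Every gcc in [4.5, 5.0) collapses to the same string, hence the same ID
        return "GCC version between 4.5 and 5.0"
    return version


for ver in ("4.4", "4.5", "4.6", "4.10", "5.0"):
    print(ver, "->", erased_compiler_version("gcc", ver))
```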

Let’s check that it works properly by creating the package for GCC 4.5:

$ conan create . pkg/1.0@myuser/mychannel -s compiler=gcc -s compiler.version=4.5 ...

Requirements
    pkg/1.0@myuser/mychannel from local
Packages
    pkg/1.0@myuser/mychannel:af044f9619574eceb8e1cca737a64bdad88246ad
...

We can see that the computed package ID is af04...46ad (not real). What happens if we specify GCC 4.6?

$ conan install pkg/1.0@myuser/mychannel -s compiler=gcc -s compiler.version=4.6 ...

Requirements
    pkg/1.0@myuser/mychannel from local
Packages
    pkg/1.0@myuser/mychannel:af044f9619574eceb8e1cca737a64bdad88246ad

The required package has the same ID again, af04...46ad. Now we can try using GCC 4.4 (< 4.5):

$ conan install pkg/1.0@myuser/mychannel -s compiler=gcc -s compiler.version=4.4 ...

Requirements
    pkg/1.0@myuser/mychannel from local
Packages
    pkg/1.0@myuser/mychannel:7d02dc01581029782b59dcc8c9783a73ab3c22dd

The computed package ID is different which means that we need a different binary package for GCC 4.4.

In the same way we have adjusted self.info.settings, we could set self.info.options values if needed.

If you want to make packages independent of build_type, removing build_type from the package settings in package_id() will work for OSX and Linux. However, when building with Visual Studio, the compiler.runtime field changes based on the build_type value, so in that case you will also want to delete the compiler.runtime field, like so:

def package_id(self):
    # build_type does not affect this package's binary
    del self.info.settings.build_type
    # With Visual Studio, compiler.runtime (MD/MDd, MT/MTd) follows build_type, so drop it too
    if self.settings.compiler == "Visual Studio":
        del self.info.settings.compiler.runtime
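One quick way to check that deleting both fields is enough is to model self.info.settings as a flat dictionary. The sketch below does that. The dotted keys such as "compiler.runtime" and the helper package_info() are assumptions for illustration only, not Conan's data structures.

```python
def package_info(settings):
    """Copy the settings and drop the entries this package's ID should ignore."""
    info = dict(settings)
    info.pop("build_type", None)
    if info.get("compiler") == "Visual Studio":
        # compiler.runtime follows build_type (MD vs MDd), so it must go too
        info.pop("compiler.runtime", None)
    return info


release = {"os": "Windows", "compiler": "Visual Studio",
           "compiler.runtime": "MD", "build_type": "Release"}
debug = {"os": "Windows", "compiler": "Visual Studio",
         "compiler.runtime": "MDd", "build_type": "Debug"}

print(package_info(release) == package_info(debug))  # True: one binary for both

# Deleting only build_type is not enough with Visual Studio
without_build_type = [{k: v for k, v in s.items() if k != "build_type"} for s in (release, debug)]
print(without_build_type[0] == without_build_type[1])  # False: MD vs MDd still differ
```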

See also

Check package_id() to see the available helper methods and change its behavior for things like:

Recipes packaging header only libraries.

Adjusting Visual Studio toolsets compatibility.

Compatible packages

Warning

This is an experimental feature subject to breaking changes in future releases.

The above approach defined 1 package ID for different input configurations. For example, all gcc versions in the range (v >= "4.5" and v < "5.0") will have exactly the same package ID, no matter which gcc version was used to build it. It worked like an information erasure: once the binary is built, it is not possible to know which gcc was used to build it.

But it is possible to define compatible binaries that have different package IDs. For instance, it is possible to have a different binary for each gcc version, so the gcc 4.8 package will be a different one, with a different package ID than the gcc 4.9 one, and still define that you can use the gcc 4.8 package when building with gcc 4.9.

We can define an ordered list of compatible packages that will be checked in order if the package ID that our profile defines is not available. Let’s see it with an example:

Let’s say that we are building with a gcc 4.9 profile, but for a given package we want to fall back to binaries built with gcc 4.8 or gcc 4.7 if we cannot find a binary built with gcc 4.9.

That can be defined as:

from conans import ConanFile

class Pkg(ConanFile):
    settings = "os", "compiler", "arch", "build_type"

    def package_id(self):
        if self.settings.compiler == "gcc" and self.settings.compiler.version == "4.9":
            # Fallbacks are checked in this order: gcc 4.8 first, then gcc 4.7
            for version in ("4.8", "4.7"):
                compatible_pkg = self.info.clone()
                compatible_pkg.settings.compiler.version = version
                self.compatible_packages.append(compatible_pkg)

Note that if the input configuration is gcc 4.8, it will not try to fall back to binaries of gcc 4.7, as the condition is not met.

The self.info.clone() method copies the values of settings, options and requires from the current instance of the recipe so they can be modified to model the compatibility.
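The lookup order described above can be modelled in a few lines of Python. In this hypothetical sketch, resolve_binary() receives the package ID computed from the profile, the ordered list of compatible package IDs, and a set standing in for the binaries that exist in the cache or remotes. The ID strings are placeholders, not real hashes.

```python
def resolve_binary(main_id, compatible_ids, available):
    """Return the first package ID that has a binary, trying the main ID first."""
    for candidate in [main_id, *compatible_ids]:
        if candidate in available:
            return candidate
    return None  # nothing found: a build from sources would be needed


# Placeholder IDs standing in for the gcc 4.9, 4.8 and 4.7 binaries
ids = {"4.9": "id-gcc49", "4.8": "id-gcc48", "4.7": "id-gcc47"}
fallbacks = [ids["4.8"], ids["4.7"]]  # same order as the loop in package_id()

print(resolve_binary(ids["4.9"], fallbacks, {"id-gcc49", "id-gcc47"}))  # id-gcc49
print(resolve_binary(ids["4.9"], fallbacks, {"id-gcc47", "id-gcc48"}))  # id-gcc48
print(resolve_binary(ids["4.9"], fallbacks, {"id-gcc47"}))  # id-gcc47
print(resolve_binary(ids["4.9"], fallbacks, set()))  # None
```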

It is the responsibility of the developer to guarantee that such binaries are indeed compatible. For example in:

from conans import ConanFile

class Pkg(ConanFile):
    options = {"optimized": [1, 2, 3]}
    default_options = {"optimized": 1}

    def package_id(self):
        # From the requested level down to 1 (e.g., 3, 2, 1), including the requested one
        for optimized in range(int(self.options.optimized), 0, -1):
            compatible_pkg = self.info.clone()
            compatible_pkg.options.optimized = optimized
            self.compatible_packages.append(compatible_pkg)

This recipe defines that the binaries are compatible with binaries of itself built with a lower optimization value. It can have up to 3 different binaries, one for each different value of the optimized option. The package_id() defines that a binary built with optimized=1 can be perfectly linked and will run even if someone defines optimized=2 or optimized=3 in their configuration. But a binary built with optimized=2 will not be considered if the requested one is optimized=1.
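The effect of range(int(self.options.optimized), 0, -1) can be checked in isolation. This sketch uses the hypothetical helpers acceptable_levels() and pick_binary(). Option values reach package_id() as strings, which is why the int() conversion is there.

```python
def acceptable_levels(requested):
    """Levels tried for a requested `optimized` value, mirroring the recipe's loop."""
    return list(range(int(requested), 0, -1))


def pick_binary(requested, built_levels):
    """First built level that the requested configuration accepts, or None."""
    for level in acceptable_levels(requested):
        if level in built_levels:
            return level
    return None


print(acceptable_levels("3"))  # [3, 2, 1]
print(pick_binary("3", {1}))  # 1: an optimized=1 binary serves an optimized=3 request
print(pick_binary("1", {2}))  # None: an optimized=2 binary is never used for optimized=1
```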

The binaries should be interchangeable in all respects. This also applies to other usages of that configuration. If this example used the optimized option to conditionally require different dependencies, that would not be taken into account. The package_id() step is processed after the whole dependency graph has been built, so it is not possible to define how dependencies are resolved based on this compatibility model. It only applies to use cases where the binaries can be interchanged.

Note

Compatible packages are a match for a binary in the dependency graph. When a compatible package is found, the --build=missing build policy will not build that package from sources.

Check the Compatible Compilers section to see another example of how to take advantage of compatible packages.

New conanfile.compatibility() method

The conanfile.compatible_packages attribute will be replaced by the new, experimental compatibility() method in Conan 2.0. This method allows you to declare compatibility in a similar way:

def compatibility(self):
    if self.settings.compiler == "gcc" and self.settings.compiler.version == "4.9":
        return [{"settings": [("compiler.version", v)]}
                for v in ("4.8", "4.7", "4.6")]
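The sketch below shows one way a returned list like this could turn into fallback configurations. It is a hypothetical model written for this explanation, not Conan 2.0 code. compatibility() is copied as a plain function over a flat settings dictionary, and candidate_configurations() applies each entry's ("setting", value) overrides to a copy of the input settings, keeping the declared order.

```python
def compatibility(settings):
    """Plain-function copy of the compatibility() method above (hypothetical)."""
    if settings["compiler"] == "gcc" and settings["compiler.version"] == "4.9":
        return [{"settings": [("compiler.version", v)]}
                for v in ("4.8", "4.7", "4.6")]
    # Like the method above, it implicitly returns None otherwise


def candidate_configurations(settings, compat_entries):
    """Apply each entry's (setting, value) overrides to a copy of the input settings."""
    candidates = []
    for entry in compat_entries or []:
        candidate = dict(settings)
        for name, value in entry.get("settings", []):
            candidate[name] = value
        candidates.append(candidate)
    return candidates


profile = {"os": "Linux", "compiler": "gcc", "compiler.version": "4.9"}
for candidate in candidate_configurations(profile, compatibility(profile)):
    print(candidate["compiler.version"])  # 4.8, then 4.7, then 4.6
```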

Please, check the compatibility() reference for more information.

Compatible Compilers

Some compilers make use of a base compiler to operate. For example, the intel compiler uses the Visual Studio compiler in Windows environments and gcc in Linux environments.

The intel compiler is declared this way in the settings.yml:

intel:
    version: ["11", "12", "13", "14", "15", "16", "17", "18", "19"]
    base:
        gcc:
            <<: *gcc
            threads: [None]
            exception: [None]
        Visual Studio:
            <<: *visual_studio

Remember, you can extend Conan to support other compilers.

You can use the package_id() method to define the compatibility between the packages generated by the base compiler and the parent one.

You can use the following helpers together with the compatible packages feature to:

Consume native Visual Studio packages when the input compiler in the profile is intel (if no intel package is available).

Do the opposite: consume an intel compiler package when a consumer profile specifies Visual Studio as the input compiler (if no Visual Studio package is available).

base_compatible(): This function will transform the settings used to calculate the package ID into the “base” compiler.

def package_id(self):
    if self.settings.compiler == "intel":
        p = self.info.clone()
        p.base_compatible()
        self.compatible_packages.append(p)

Using the above package_id() method, if a consumer specifies an intel profile (-s compiler=intel) and there is no binary available, it will resolve to a Visual Studio package ID corresponding to the base compiler.

parent_compatible(compiler="compiler", version="version"): This function transforms the settings of a compiler into the settings of a parent one, using the specified one as the base compiler. As the details of the “parent” compatible cannot be guessed, you have to provide them as keyword args to the function. The “compiler” argument is mandatory; the rest of the keyword arguments will be used to initialize the info.settings.compiler.XXX objects to calculate the correct package ID.

def package_id(self):
    if self.settings.compiler == "Visual Studio":
        compatible_pkg = self.info.clone()
        compatible_pkg.parent_compatible(compiler="intel", version=16)
        self.compatible_packages.append(compatible_pkg)

In this case, for a consumer specifying the Visual Studio compiler, if no package is found, it will search for an “intel” package for version 16.

Take into account that you can also use these helpers without the “compatible packages” feature:

def package_id(self):
    if self.settings.compiler == "Visual Studio":
        self.info.parent_compatible(compiler="intel", version=16)

In the above example, we transform the package ID of the Visual Studio package to be the same as the intel 16 one. However, you won’t be able to differentiate the packages built with intel from the ones built with Visual Studio, because both will have the same package ID, and that is not always desirable.

Dependency Issues

Let’s define a simple scenario where there are two packages: my_other_lib/2.0 and my_lib/1.0, which depends on my_other_lib/2.0. Let’s assume that their recipes and binaries have already been created and uploaded to a Conan remote.

Now, a new version, my_other_lib/2.1, is released with an improved recipe and new binaries. my_lib/1.0 is modified and is required to be upgraded to my_other_lib/2.1.

Note

This scenario will be the same if a consuming project of my_lib/1.0 defines a dependency on my_other_lib/2.1, which takes precedence over the existing requirement in my_lib/1.0.

The question is: is it necessary to build new my_lib/1.0 binary packages, or are the existing packages still valid?

The answer: It depends.

Let’s assume that both packages are compiled as static libraries and that the API exposed by my_other_lib to my_lib/1.0 through the public headers has not changed at all. In this case, it is not required to build new binaries for my_lib/1.0, because the final consumer will link against both my_lib/1.0 and my_other_lib/2.1.

On the other hand, it could happen that the API exposed by my_other_lib in the public headers has changed, but without affecting the my_lib/1.0 binary for any reason (for example, changes consisting of new functions not used by my_lib). The same reasoning would apply if my_other_lib were header-only.

But what if a header file of my_other_lib, named myadd.h, has changed from 2.0 to 2.1:

// myadd.h in my_other_lib/2.0
int addition (int a, int b) { return a - b; }

// myadd.h in my_other_lib/2.1
int addition (int a, int b) { return a + b; }

And what if the addition() function is called from the compiled .cpp files of my_lib/1.0?

Then a new binary for my_lib/1.0 must be built for the new dependency version. Otherwise it will keep the old, buggy addition() version. Even if neither the code nor the recipe of my_lib/1.0 has changed, rebuilding my_lib requires my_other_lib/2.1, and the resulting binary package must be different.

Using package_id() for Package Dependencies

The self.info object also has a requires object. It is a dictionary containing the necessary information for each requirement, covering all direct and transitive dependencies. For example, self.info.requires["my_other_lib"] is a RequirementInfo object.

Each RequirementInfo has the following read-only reference fields:

full_name: Full require’s name, e.g., my_other_lib

full_version: Full require’s version, e.g., 1.2

full_user: Full require’s user, e.g., my_user

full_channel: Full require’s channel, e.g., stable

full_package_id: Full require’s package ID, e.g., c6d75a…

The following fields are used in the package_id() evaluation:

name: By default the same value as full_name, e.g., my_other_lib.

version: By default the major version representation of full_version, e.g., 1.Y for a 1.2 full_version field and 1.Y.Z for a 1.2.3 full_version field.

user: By default None (doesn’t affect the package ID).

channel: By default None (doesn’t affect the package ID).

package_id: By default None (doesn’t affect the package ID).

When defining a package ID to model dependencies, it is necessary to take into account two factors:

The versioning schema followed by our requirements (semver?, custom?).

The type of library being built or reused (shared (.so, .dll, .dylib), static).

Versioning Schema

By default Conan assumes semver compatibility. For example, if the minor version changes from 2.0 to 2.1, Conan will assume that the API is compatible (headers not changing) and that it is not necessary to build a new binary for it. This also applies to patches: changing from 2.1.10 to 2.1.11 doesn’t require a rebuild.

If it is necessary to change the default behavior, the applied versioning schema can be customized within the package_id() method:

from conans import ConanFile

class PkgConan(ConanFile):
    name = "my_lib"
    version = "1.0"
    settings = "os", "compiler", "build_type", "arch"
    requires = "my_other_lib/2.0@lasote/stable"

    def package_id(self):
        myotherlib = self.info.requires["my_other_lib"]

        # Alternative: any change in the my_other_lib version changes the current package ID
        # myotherlib.version = myotherlib.full_version

        # Changes in major and minor versions will change the package ID, but
        # a my_other_lib patch alone won't. E.g., from 1.2.3 to 1.2.89 won't change.
        myotherlib.version = myotherlib.full_version.minor()

Besides version, there are additional helpers that can be used to determine whether the channel and user of a dependency also affect the binary package, or even whether the required package ID can change your own package ID.

You can determine whether the following variables within any requirement change the ID of your binary package using the following modes:

| Modes / Variables | name | version | user | channel | package_id | RREV | PREV |
|---|---|---|---|---|---|---|---|
| semver_direct_mode() | Yes | Yes, only > 1.0.0 (e.g., 1.2.Z+b102) | No | No | No | No | No |
| semver_mode() | Yes | Yes, only > 1.0.0 (e.g., 1.2.Z+b102) | No | No | No | No | No |
| major_mode() | Yes | Yes (e.g., 1.2.Z+b102) | No | No | No | No | No |
| minor_mode() | Yes | Yes (e.g., 1.2.Z+b102) | No | No | No | No | No |
| patch_mode() | Yes | Yes (e.g., 1.2.3+b102) | No | No | No | No | No |
| base_mode() | Yes | Yes (e.g., 1.7+b102) | No | No | No | No | No |
| full_version_mode() | Yes | Yes (e.g., 1.2.3+b102) | No | No | No | No | No |
| full_recipe_mode() | Yes | Yes (e.g., 1.2.3+b102) | Yes | Yes | No | No | No |
| full_package_mode() | Yes | Yes (e.g., 1.2.3+b102) | Yes | Yes | Yes | No | No |
| unrelated_mode() | No | No | No | No | No | No | No |
| recipe_revision_mode() | Yes | Yes | Yes | Yes | Yes | Yes | No |
| package_revision_mode() | Yes | Yes | Yes | Yes | Yes | Yes | Yes |

All the modes can be applied to all dependencies, or to individual ones:

def package_id(self):
    # apply semver_mode for all the dependencies of the package
    self.info.requires.semver_mode()
    # use semver_mode just for my_other_lib
    self.info.requires["my_other_lib"].semver_mode()

semver_direct_mode(): This is the default mode. It uses semver_mode() for direct dependencies (first-level dependencies, directly declared by the package) and unrelated_mode() for indirect, transitive dependencies of the package. It assumes that the binary will be affected by the direct dependencies, which will already encode how their transitive dependencies affect them. This might not always be true, as explained above, and that is the reason it is possible to customize it.

In this mode, if the package depends on “my_lib”, which transitively depends on “my_other_lib”, the mode means:

my_lib/1.2.3@user/testing => my_lib/1.Y.Z
my_other_lib/2.3.4@user/testing =>

So the direct dependencies are mapped to the major version only. Changing its channel, or using version my_lib/1.4.5, will still produce my_lib/1.Y.Z and thus the same package ID. The indirect, transitive dependency doesn’t affect the package ID at all.

Important

Known bug: the semver_direct_mode package ID mode takes into account the options of transitive requirements. This means that modifying the options of any transitive requirement will modify the computed package ID, and adding/removing a transitive requirement will also modify the computed package ID (this happens even if the added/removed requirement doesn’t have any option).

semver_mode(): In this mode, only a major release version (starting from 1.0.0) changes the package ID. Every version change prior to 1.0.0 changes the package ID, but only major changes after 1.0.0 will be applied.

def package_id(self):
    self.info.requires["my_other_lib"].semver_mode()

This results in:

my_lib/1.2.3@user/testing => my_lib/1.Y.Z
my_other_lib/2.3.4@user/testing => my_other_lib/2.Y.Z

In this mode, versions starting with 0 are considered unstable and mapped to the full version:

my_lib/0.2.3@user/testing => my_lib/0.2.3
my_other_lib/0.3.4@user/testing => my_other_lib/0.3.4

major_mode(): Any change in the major release version (starting from 0.0.0) changes the package ID.

def package_id(self):
    self.info.requires["my_other_lib"].major_mode()

This mode is basically the same as semver_mode, the only difference being that major versions 0.Y.Z, which are considered unstable by semver, are still mapped to only the major, dropping the minor and patch parts.

minor_mode(): Any change in the major or minor (not patch nor build) version of the required dependency changes the package ID.

def package_id(self):
    self.info.requires["my_other_lib"].minor_mode()

patch_mode(): Any change to the major, minor or patch (not build) version of the required dependency changes the package ID.

def package_id(self):
    self.info.requires["my_other_lib"].patch_mode()

base_mode(): Any change to the base of the version (not build) of the required dependency changes the package ID. Note that in the case of semver notation this may produce the same result as patch_mode(), but it is actually intended to dismiss the build part of the version even without strict semver.

def package_id(self):
    self.info.requires["my_other_lib"].base_mode()

full_version_mode(): Any change to the version of the required dependency changes the package ID.

def package_id(self):
    self.info.requires["my_other_lib"].full_version_mode()

my_other_lib/1.3.4-a4+b3@user/testing => my_other_lib/1.3.4-a4+b3

full_recipe_mode(): Any change in the reference of the requirement (user and channel too) changes the package ID.

def package_id(self):
    self.info.requires["my_other_lib"].full_recipe_mode()

This keeps the whole dependency reference, except the package ID of the dependency.

my_other_lib/1.3.4-a4+b3@user/testing => my_other_lib/1.3.4-a4+b3@user/testing

full_package_mode(): Any change in the required version, user, channel or package ID changes the package ID.

def package_id(self):
    self.info.requires["my_other_lib"].full_package_mode()

Any change to the dependency, including its binary package ID, will in turn produce a new package ID for the consumer package.

my_other_lib/1.3.4-a4+b3@user/testing:73b..fa56 => my_other_lib/1.3.4-a4+b3@user/testing:73b..fa56

unrelated_mode(): Requirements do not change the package ID.

def package_id(self):
    self.info.requires["my_other_lib"].unrelated_mode()

recipe_revision_mode(): The full reference and the package ID of the dependencies, pkg/version@user/channel#RREV:pkg_id (including the recipe revision), will be taken into account to compute the consumer package ID.

mypkg/1.3.4@user/testing#RREV1:73b..fa56#PREV1 => mypkg/1.3.4@user/testing#RREV1:73b..fa56

def package_id(self):
    self.info.requires["mypkg"].recipe_revision_mode()

package_revision_mode(): The full package reference pkg/version@user/channel#RREV:ID#PREV of the dependencies, including the recipe revision, the binary package ID and the package revision, will be taken into account to compute the consumer package ID. This is the strictest mode. Any change in the upstream will produce new consumer package IDs, making it a fully deterministic binary model.

# The full reference of the dependency package binary will be used as-is
mypkg/1.3.4@user/testing#RREV1:73b..fa56#PREV1 => mypkg/1.3.4@user/testing#RREV1:73b..fa56#PREV1

def package_id(self):
    self.info.requires["mypkg"].package_revision_mode()
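The version column of the table can be reproduced with plain string handling. The sketch below is a simplification, not Conan's Version class. It assumes versions of the form MAJOR.MINOR.PATCH with an optional +BUILD suffix, and the function names only mirror the mode names.

```python
def split_version(full_version):
    """Split "1.2.3+b102" into (["1", "2", "3"], "b102"); the build part may be ""."""
    base, _, build = full_version.partition("+")
    return base.split("."), build


def major_mode(v):
    parts, _ = split_version(v)
    return f"{parts[0]}.Y.Z"


def minor_mode(v):
    parts, _ = split_version(v)
    return f"{parts[0]}.{parts[1]}.Z"


def patch_mode(v):
    parts, _ = split_version(v)
    return ".".join(parts[:3])


def semver_mode(v):
    parts, _ = split_version(v)
    # 0.x versions are unstable in semver, so the full version is kept
    return v if parts[0] == "0" else major_mode(v)


def full_version_mode(v):
    return v


def unrelated_mode(v):
    return None  # the dependency does not contribute to the package ID


for version in ("1.2.3+b102", "0.3.4"):
    print(version, [mode(version) for mode in
                    (semver_mode, major_mode, minor_mode, patch_mode, full_version_mode)])
```

Each function returns the string that would be hashed in place of the dependency version, so two dependency versions map to the same binary exactly when the chosen mode returns the same string for both.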

Note

Version ranges are not used to calculate the package_id; only the version resolved in the graph is used.

You can also adjust the individual properties manually:

def package_id(self):
    myotherlib = self.info.requires["my_other_lib"]

    # Same as myotherlib.semver_mode()
    myotherlib.name = myotherlib.full_name
    myotherlib.version = myotherlib.full_version.stable()  # major(), minor(), patch(), base, build
    myotherlib.user = myotherlib.channel = myotherlib.package_id = None

    # Alternatively (these assignments override the ones above):
    # only the channel (and the name) matters
    myotherlib.name = myotherlib.full_name
    myotherlib.user = myotherlib.package_id = myotherlib.version = None
    myotherlib.channel = myotherlib.full_channel

The result of package_id() is the package ID hash, but the details can be checked in the generated conaninfo.txt file. The [requires], [options] and [settings] sections are taken into account when generating the SHA1 hash for the package ID, while the [full_xxxx] fields show the complete reference information.

The default behavior produces a conaninfo.txt that looks like:

[requires]
    MyOtherLib/2.Y.Z

[full_requires]
    MyOtherLib/2.2@demo/testing:73bce3fd7eb82b2eabc19fe11317d37da81afa56

Changing the default package-id mode

It is possible to change the default semver_direct_mode package-id mode in the conan.conf file:

[general]
default_package_id_mode=full_package_mode

Possible values are the names of the above methods: full_recipe_mode, semver_mode, etc.

Note

The default_package_id_mode is a global configuration. It will change how all the package IDs are computed, for all packages. It is impossible to mix different default_package_id_mode values. The same default_package_id_mode must be used in all clients, servers, CI, etc., and it cannot be changed without rebuilding all packages.

Note that the default package-id mode is the mode used when the package is initialized, before the package_id() method is called. You can still define full_package_mode as the default in conan.conf, but a recipe might declare that it is header-only, with:

def package_id(self):
    self.info.clear()  # clears requires, but also settings if existing
    # or, if there are no settings/options, this would be equivalent:
    # self.info.requires.clear()  # or self.info.requires.unrelated_mode()

That method would still be executed, changing the “default” behavior and leading to a package that only generates 1 package ID for all possible configurations and versions of dependencies.

Remember that conan.conf can be shared and installed with conan config install.

Take into account that you can combine the compatible packages with the package-id modes.

For example, suppose you are generating binary packages with recipe_revision_mode as the default package-id mode, but you want these packages to be consumed from a client with a different mode activated. You can define a compatible package that transforms the mode to recipe_revision_mode. The package generated with recipe_revision_mode can then be resolved if no package for the client's default mode is found:

from conans import ConanFile

class Pkg(ConanFile):
    ...

    def package_id(self):
        # Fallback: the package ID this recipe would get under recipe_revision_mode
        p = self.info.clone()
        p.requires.recipe_revision_mode()
        self.compatible_packages.append(p)

Enabling full transitivity in package_id modes

Warning

This will become the default behavior in the future (Conan 2.0). It is recommended to activate it when possible (it might require rebuilding some packages, as their package IDs will change).

When a package declares in its package_id() method that it is not affected by its dependencies, that will propagate down to the indirect consumers of that package. There are several ways this can be done, for example self.info.clear(), self.info.requires.clear(), self.info.requires.remove("dep") and self.info.requires.unrelated_mode().

Let’s assume for the discussion that it is a header only library, using the self.info.clear() helper. This header only package has

a single dependency, which is a static library. Then, downstream

consumers of the header only library that uses a package mode different from the default, should be also affected by the upstream

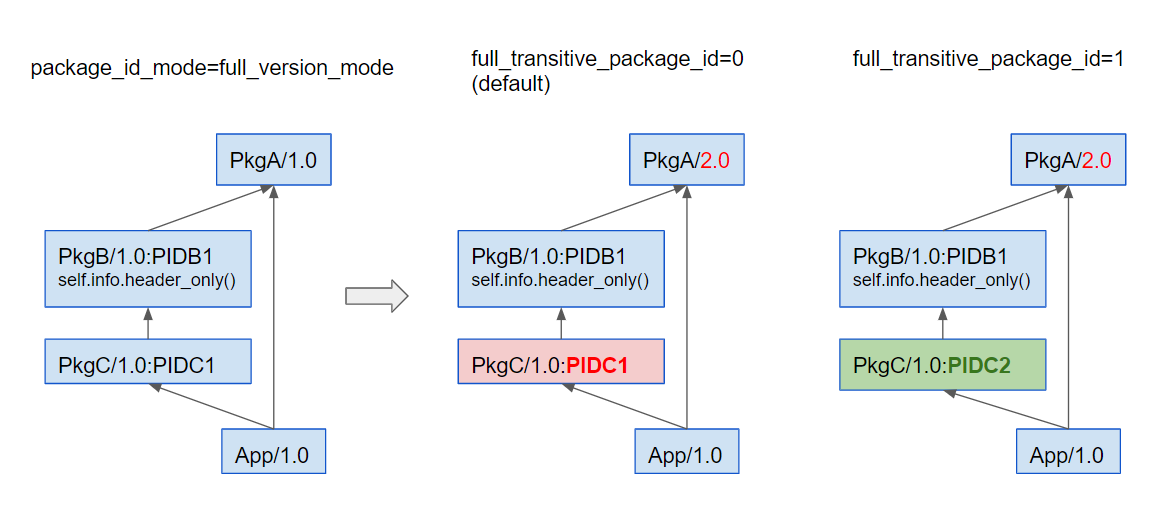

transitivity dependency. Lets say that we have the following scenario:

app/1.0depends onpkgc/1.0andpkga/1.0pkgc/1.0depends only onpkgb/1.0pkgb/1.0depends onpkga/1.0, and definesself.info.clear()in itspackage_id()We are using

full_version_modeNow we create a new

pkga/2.0that has some changes in its header, that would require to rebuildpkgc/1.0against it.app/1.0now depends on`pkgc/1.0andpkga/2.0

With the default behavior, the header-only pkgb isolates pkgc from the effects of upstream changes. The package ID PIDC1 we get for pkgc/1.0 is exactly the same when depending on pkga/1.0 and on pkga/2.0.

If we want full_version_mode to be fully transitive, irrespective of the local package-id modes of the packages, we can configure it in conan.conf. To summarize, you can activate the general.full_transitive_package_id configuration ($ conan config set general.full_transitive_package_id=1).

If we do this, then pkgc/1.0 will compute 2 different package IDs: one for pkga/1.0 (PIDC1) and the other to link with pkga/2.0 (PIDC2).
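The scenario above can be reduced to a toy model, which is not Conan's implementation. Here a package ID is the SHA1 of the full references (as in full_version_mode) that remain visible to pkgc/1.0. A package listed in cleared hides its own upstream dependencies unless full transitivity is enabled. The graph dictionaries and helper names are hypothetical.

```python
import hashlib


def visible_requires(pkg, graph, cleared, full_transitive):
    """Full references that end up in pkg's package ID information (toy model)."""
    visible = set()
    for dep in graph.get(pkg, []):
        visible.add(dep)
        # A dependency that cleared its info hides its upstream, unless full transitivity is on
        if full_transitive or dep not in cleared:
            visible |= visible_requires(dep, graph, cleared, full_transitive)
    return visible


def package_id(pkg, graph, cleared, full_transitive=False):
    text = "\n".join(sorted(visible_requires(pkg, graph, cleared, full_transitive)))
    return hashlib.sha1(text.encode("utf-8")).hexdigest()


# Resolved graphs before and after app/1.0 moves to pkga/2.0
graph_a1 = {"pkgc/1.0": ["pkgb/1.0"], "pkgb/1.0": ["pkga/1.0"]}
graph_a2 = {"pkgc/1.0": ["pkgb/1.0"], "pkgb/1.0": ["pkga/2.0"]}
cleared = {"pkgb/1.0"}  # pkgb/1.0 calls self.info.clear() in its package_id()

# Default: pkgb hides pkga, so pkgc keeps the same ID (PIDC1 in both cases)
print(package_id("pkgc/1.0", graph_a1, cleared) == package_id("pkgc/1.0", graph_a2, cleared))  # True
# full_transitive_package_id=1: pkga's version reaches pkgc (PIDC1 vs PIDC2)
print(package_id("pkgc/1.0", graph_a1, cleared, True) == package_id("pkgc/1.0", graph_a2, cleared, True))  # False
```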